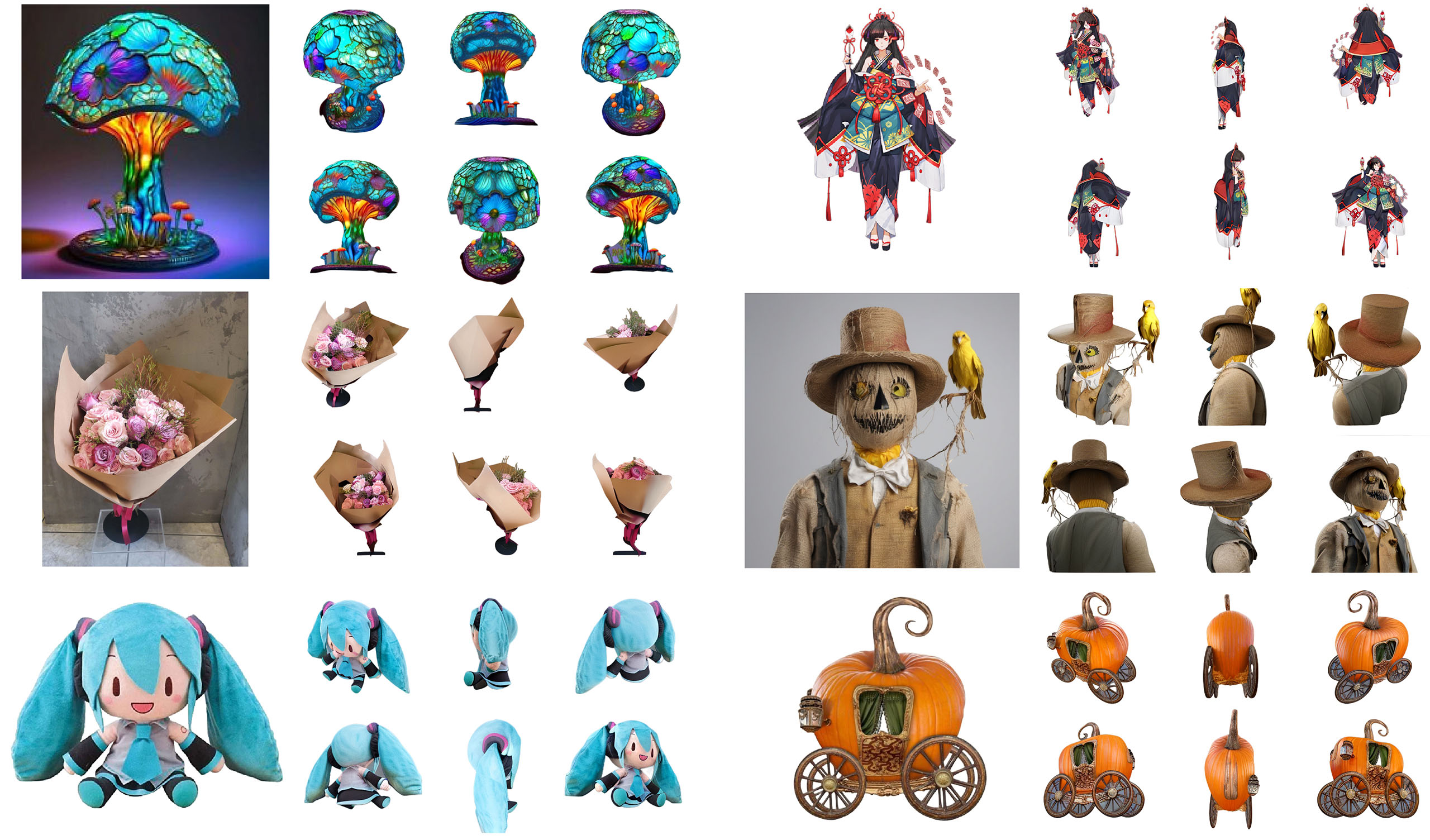

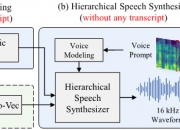

We report Zero123++, an image-conditioned diffusion model for generating 3D-consistent multi-view images from a single input view. To take full advantage of pretrained 2D generative priors, we develop various conditioning and training schemes to minimize the effort of finetuning from off-the-shelf image diffusion models such as Stable Diffusion. Zero123++ excels in producing high-quality, consistent multi-view images from a single image, overcoming common issues like texture degradation and geometric misalignment. Furthermore, we showcase the feasibility of training a ControlNet on Zero123++ for enhanced control over the generation process.

Paper: https://arxiv.org/pdf/2310.15110v1.pdf

Project source code: https://github.com/SUDO-AI-3D/zero123plus
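Zero123++ produces its six generated views tiled together in a single output image, so a common first step after running the released model is to cut that image back into separate views. The sketch below is a minimal, hypothetical helper (`grid_crop_boxes` is not part of the project's code). It assumes a layout of three rows by two columns of equal square tiles, for example 320×320 tiles in a 640×960 (width × height) image. Treat the layout and tile size as assumptions and check them against the model version you run. Boxes use the `(left, upper, right, lower)` convention of Pillow's `Image.crop` and are returned in row-major order.

```python
from typing import List, Tuple

# (left, upper, right, lower), the same box convention Pillow's Image.crop uses
Box = Tuple[int, int, int, int]


def grid_crop_boxes(width: int, height: int, rows: int = 3, cols: int = 2) -> List[Box]:
    """Return one crop box per tile of a rows x cols grid, in row-major order.

    Hypothetical helper: the 3x2 default assumes the six output views are
    tiled as three rows by two columns; verify this for your model version.
    """
    if width % cols or height % rows:
        raise ValueError("image size is not divisible by the grid shape")
    tile_w, tile_h = width // cols, height // rows
    boxes: List[Box] = []
    for r in range(rows):
        for c in range(cols):
            left, upper = c * tile_w, r * tile_h
            boxes.append((left, upper, left + tile_w, upper + tile_h))
    return boxes


if __name__ == "__main__":
    # Assumed example: a 640x960 image holding six 320x320 views.
    for box in grid_crop_boxes(640, 960):
        print(box)
```

With Pillow, `views = [img.crop(box) for box in grid_crop_boxes(*img.size)]` would split a loaded result image `img` into individual views, because `img.size` is `(width, height)`. Keeping the helper free of image dependencies makes the box arithmetic easy to check in isolation.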